AI-Driven Object Manipulation in Film and Entertainment

By Sugar Dashdavaa | Sep 2024

Abstract

This research paper explores the potential of AI-driven object manipulation within films and video content, aiming to enhance viewer engagement by offering personalized, real-time alterations. It investigates existing technologies and methods for manipulating on-screen objects and analyzes the technical, ethical, and legal implications of implementing such interactive features. It also considers the feasibility of developing a system that allows viewers to dynamically alter characters, objects, and environments as they watch, contributing to a unique, immersive viewing experience.

Keywords

Object manipulation, viewer engagement, Convolutional Neural Networks (CNNs), Generative Adversarial Networks (GANs), edge computing, real-time rendering, deepfake technology, augmented reality (AR), virtual reality (VR).

Introduction

Recent advances in artificial intelligence (AI) have ushered in a new era in the film and entertainment industry, where AI-powered technologies are redefining how films and other entertainment are experienced. Traditionally, films have been regarded as static, linear experiences in which audiences passively watch content created by directors and filmmakers. However, emerging AI-driven technologies, already used in sectors such as music, gaming, and virtual reality (Nautiyal et al., 2023), have the potential to change this pattern significantly by allowing audiences to interact with and personalize content in real time. In particular, manipulating elements within a film, such as characters, backgrounds, or props, is becoming increasingly achievable as AI advances.

This research investigates the concept of AI-driven object manipulation within films and videos, studying both current technological capabilities and the feasibility of creating a customizable viewing experience. It also examines the legal and ethical considerations surrounding object modification in cinematic content, particularly copyright and intellectual property (IP) issues. Through a review of current implementations and theoretical models, this research aims to establish a foundational understanding of how AI-driven manipulation of film elements could transform film and entertainment.

Existing AI Applications in Film

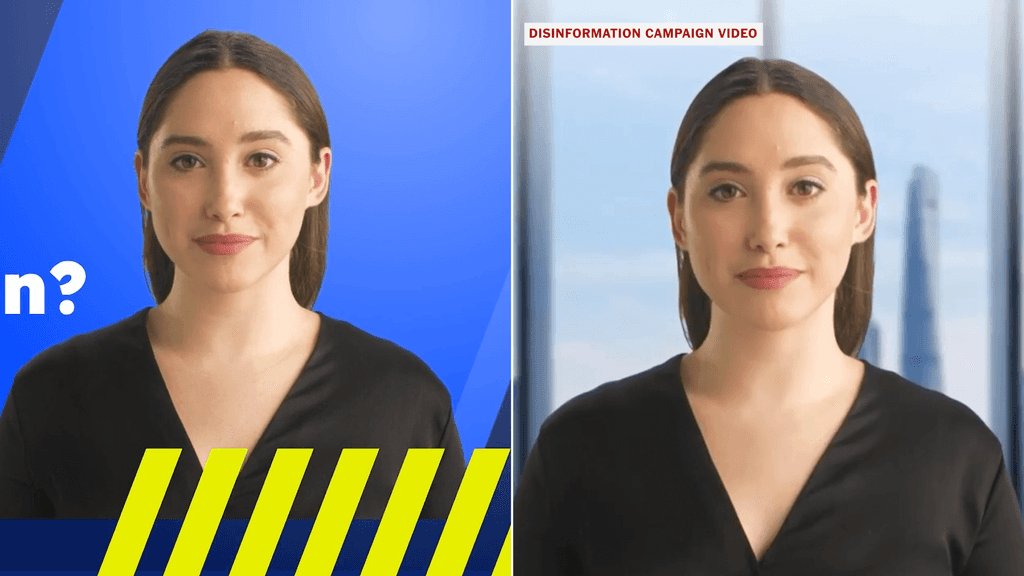

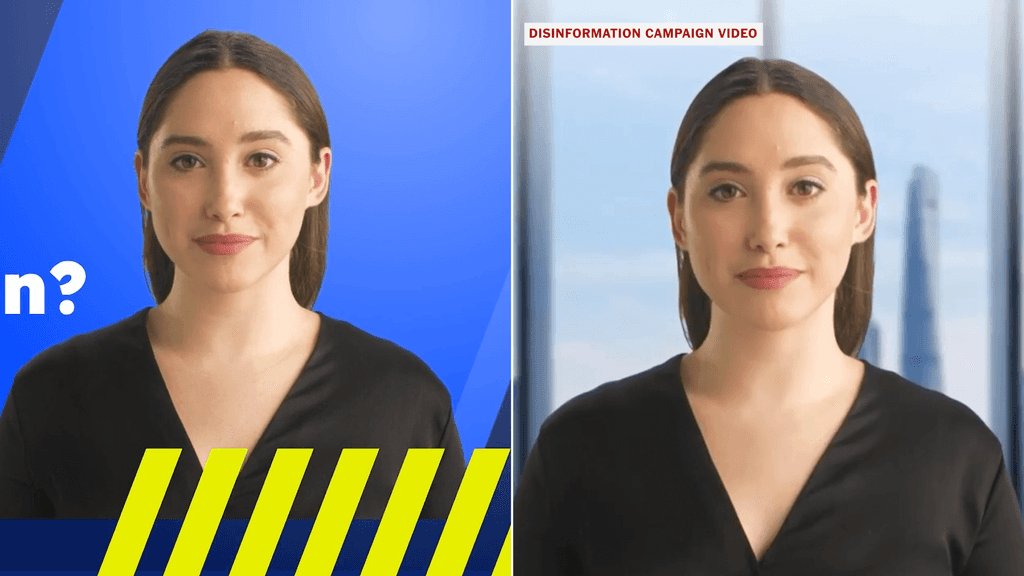

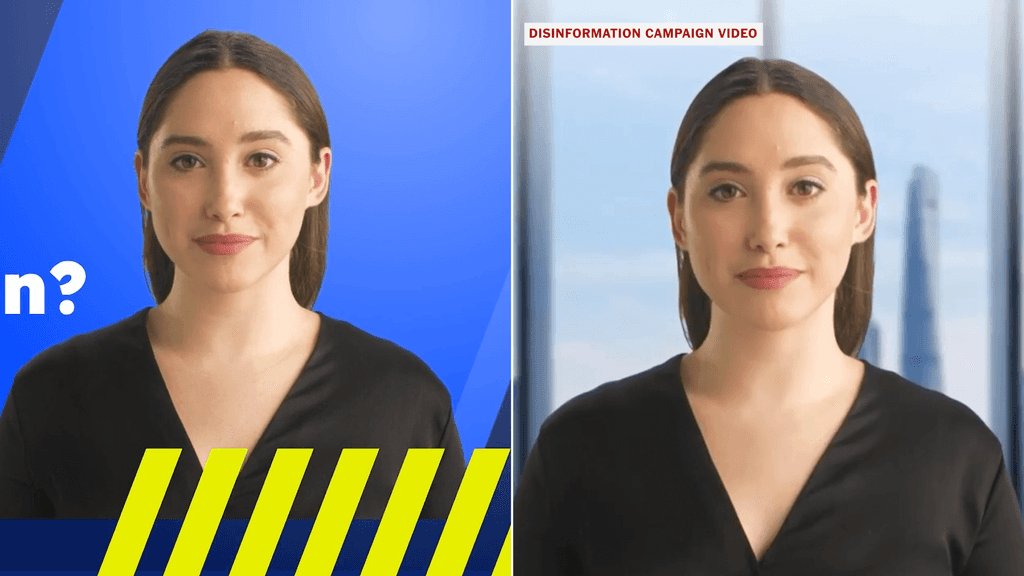

The emergence of AI has opened new opportunities in filmmaking, including deepfake technology, which can alter or transform a person's face and facial expressions in video. Deepfake technology has become a powerful tool in filmmaking and content creation, enhancing visual effects and enabling creative storytelling (Lees, 2024). However, its advancement raises significant ethical concerns, particularly in documentary filmmaking, where it challenges the representation of reality (Lees, 2024).

These tools demonstrate AI’s ability to create transformations that preserve the apparent realism of the original media, showing how advanced the integration of AI into visual modification has become.

Figure 1. Deepfake technology

Interactive Media and Video Games

The video game industry has also established a significant foundation for understanding AI’s potential in interactive media. In popular games like The Sims and Cyberpunk 2077, players can customize characters and manipulate objects manually, offering a model for real-time rendering and interactive object manipulation.

These implementations show the potential of AI to create immersive and personalized experiences within virtual environments. However, they remain relatively far from enabling object manipulation in films: in games, customization is a built-in feature of an engine that renders each scene from editable assets, whereas a film consists of pre-recorded frames.

Figure 2. The Sims 3: manipulating objects manually

Personalized Content Streams

Another approach is personalized and interactive content on streaming services such as Netflix. Netflix’s interactive film Black Mirror: Bandersnatch allows viewers to influence the direction of the story, demonstrating the feasibility of custom narratives. As Noel puts it, “The user has to pay attention to move forward and is directly involved in the storytelling.” (Noel, 2019).

Although this implementation focuses on branching storylines rather than object-focused customization, it demonstrates that personalized media experiences are viable and can serve as a step toward more fully customized viewing.
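
Branching narratives of this kind are commonly modeled as a directed graph of scenes, where each viewer choice selects an outgoing edge. The Python sketch below illustrates only that general idea; the scene names, the `Scene` class, and the `play` helper are hypothetical and are not taken from Netflix's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """A hypothetical story segment with labeled choices leading to other scenes."""
    name: str
    choices: dict[str, str] = field(default_factory=dict)  # choice label -> next scene name


def play(story: dict[str, Scene], start: str, picks: list[str]) -> list[str]:
    """Follow the viewer's picks through the story graph and return the scenes visited."""
    path = [start]
    current = story[start]
    for pick in picks:
        if pick not in current.choices:
            raise ValueError(f"'{pick}' is not a valid choice in scene '{current.name}'")
        current = story[current.choices[pick]]
        path.append(current.name)
        if not current.choices:  # terminal scene: the story ends here
            break
    return path


story = {
    "intro": Scene("intro", {"accept": "office", "refuse": "home"}),
    "office": Scene("office"),
    "home": Scene("home", {"call": "ending_a", "wait": "ending_b"}),
    "ending_a": Scene("ending_a"),
    "ending_b": Scene("ending_b"),
}

print(play(story, "intro", ["refuse", "wait"]))  # ['intro', 'home', 'ending_b']
```

Here every choice switches between pre-made scenes; object-level customization would instead require changes inside each scene, which is a considerably harder problem.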

Figure 3. Netflix's Black Mirror: Bandersnatch

Computer Vision and Object Recognition

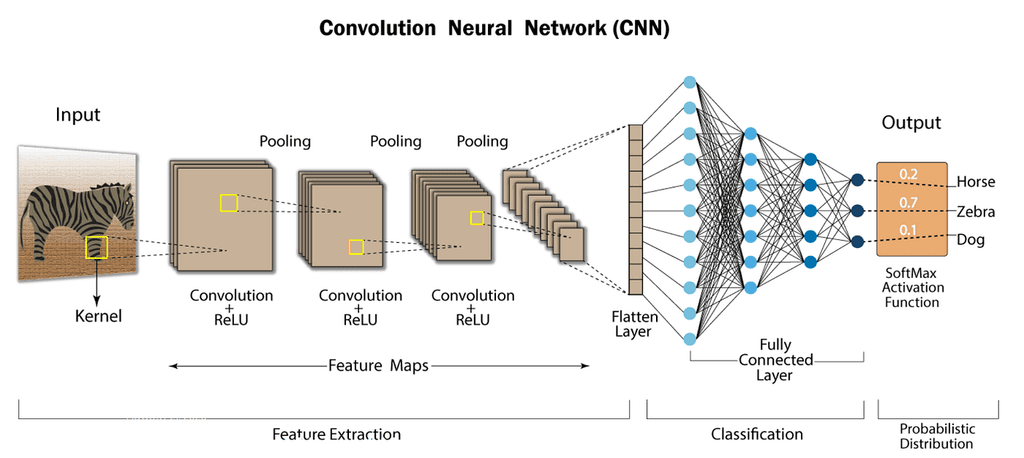

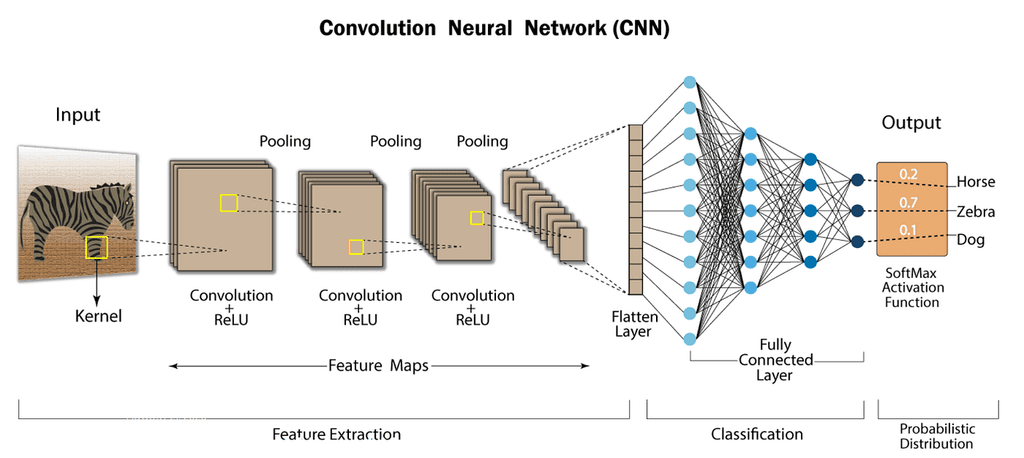

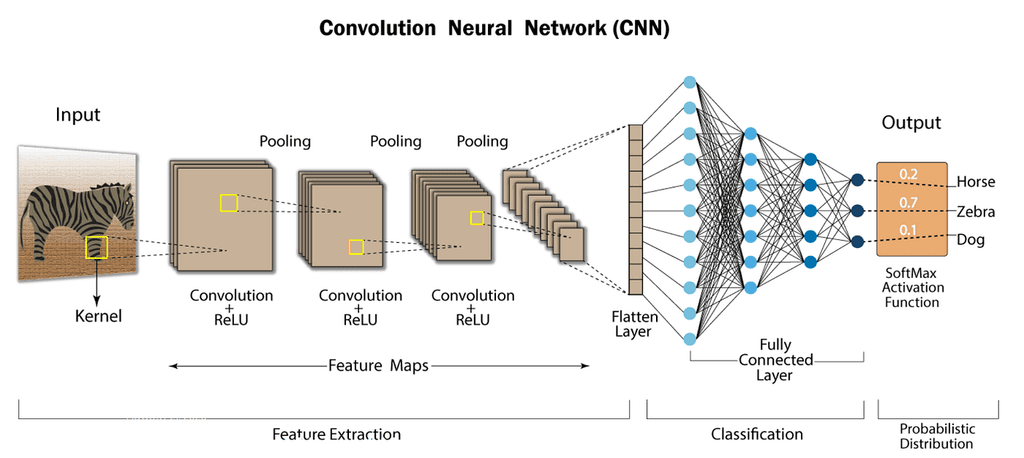

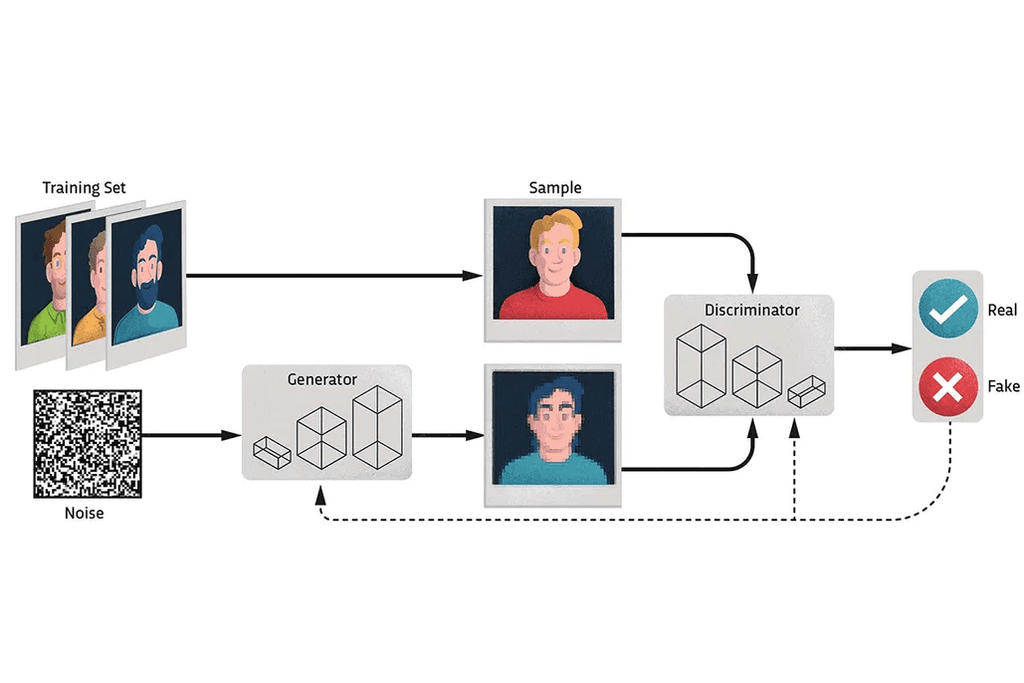

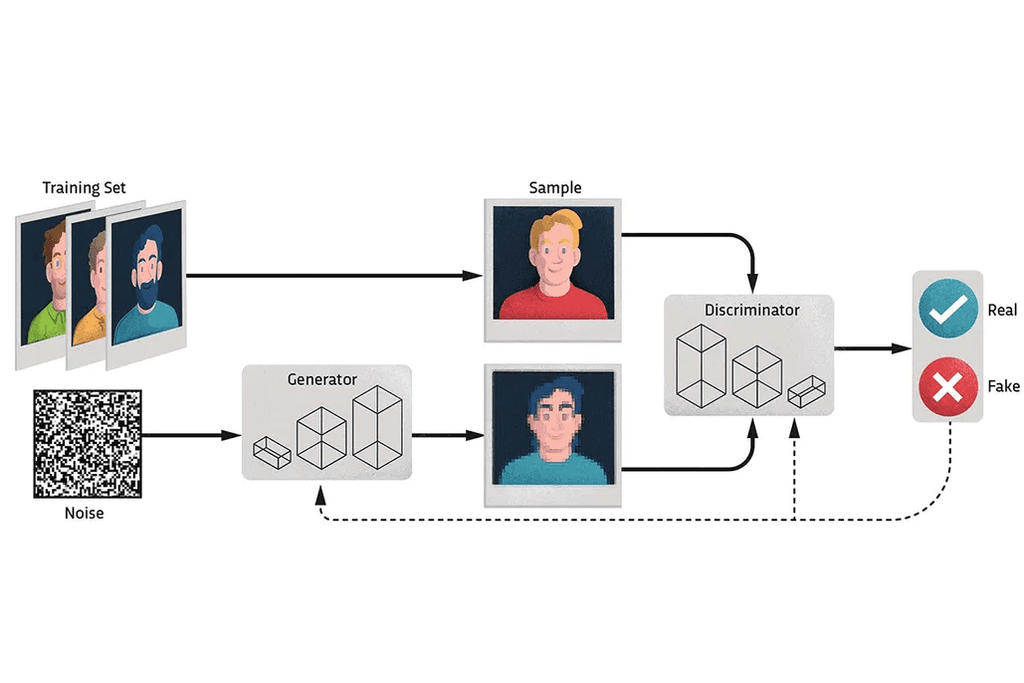

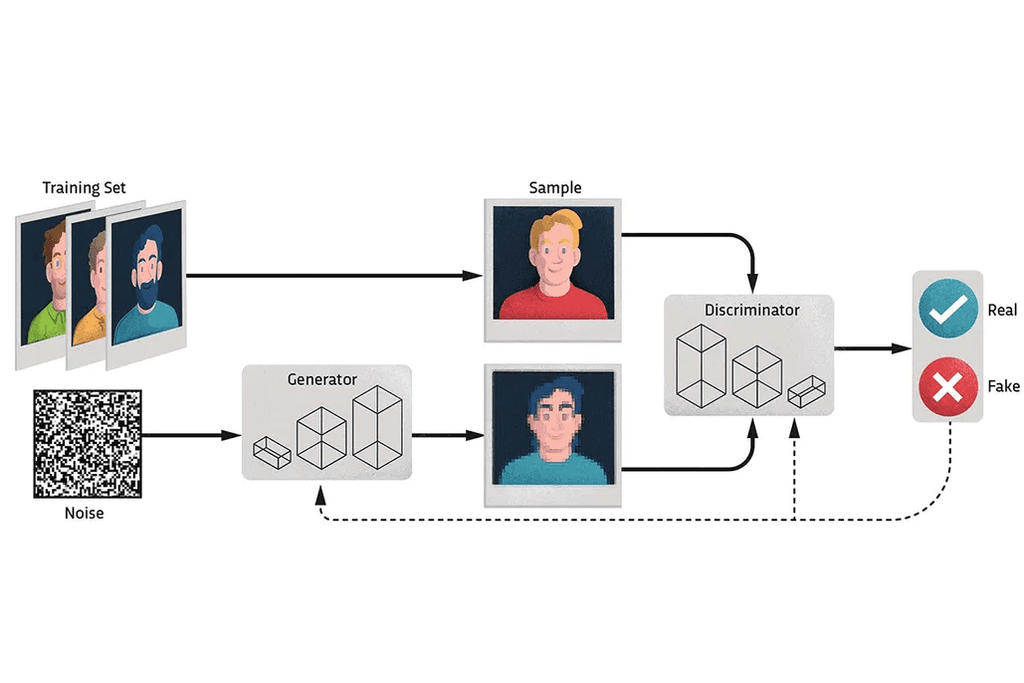

Object recognition and manipulation in video editing are evolving rapidly with the help of Convolutional Neural Networks (CNNs), which locate and identify objects within video frames, and Generative Adversarial Networks (GANs), which synthesize the modified imagery. These deep-learning models are pivotal in allowing editors, guided by manual input, to enhance scenes, replace backgrounds, or even swap characters. CNNs have proven effective at detecting, recognizing, and tracking specific objects in video sequences, with TensorFlow-based models such as SSD and Inception achieving high accuracy in object detection and classification (Ashwith et al., 2022). GANs, in turn, generate highly realistic imagery that lets editors modify elements within scenes. As Wang et al. put it, “High-resolution GANs not only synthesize realistic objects but also allow for semantic manipulation, making them ideal for interactive, real-time applications.” (Wang et al., 2018). These technologies also show potential in both traditional editing and emerging fields such as augmented reality (AR) and virtual reality (VR), where precise object identification is essential for immersive user experiences.
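
Single-shot detectors such as SSD typically produce many overlapping candidate boxes for the same object and rely on non-maximum suppression (NMS) to keep only the most confident one. The sketch below shows greedy NMS based on intersection-over-union (IoU) in plain Python; the (x1, y1, x2, y2) box format and the 0.5 threshold are assumptions for illustration, not parameters reported by Ashwith et al. (2022).

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: list[Box], scores: list[float], iou_threshold: float = 0.5) -> list[int]:
    """Greedy non-maximum suppression; returns indices of the boxes that are kept."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: list[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any higher-scoring kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The first two boxes overlap with an IoU of about 0.82, so the lower-scoring one is suppressed, while the distant third box survives as a separate detection.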

Figure 4. Convolutional Neural Networks (CNNs)

Figure 5. Generative Adversarial Networks (GANs)
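
The adversarial setup behind GANs can be summarized by the standard training losses: a discriminator D is trained to score real images near 1 and generated images near 0, while a generator G is trained so that D scores its outputs as real. The NumPy sketch below computes these losses from hypothetical discriminator probabilities only; it omits the networks themselves, and the conditional, high-resolution design of Wang et al. (2018) adds further components (such as multi-scale discriminators and a feature-matching loss) that are not shown here.

```python
import numpy as np

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Binary cross-entropy: push D(x) toward 1 for real images and D(G(z)) toward 0 for fakes."""
    return float(-np.mean(np.log(d_real + EPS)) - np.mean(np.log(1.0 - d_fake + EPS)))


def generator_loss(d_fake: np.ndarray) -> float:
    """Non-saturating generator loss: push D(G(z)) toward 1 so fakes are judged real."""
    return float(-np.mean(np.log(d_fake + EPS)))


# Hypothetical discriminator outputs (probabilities) for a batch of real and generated images.
d_real = np.array([0.9, 0.8])
d_fake = np.array([0.2, 0.1])
print(discriminator_loss(d_real, d_fake))
print(generator_loss(d_fake))
```

In this example the discriminator separates real from generated images well, so its loss is low and the generator's loss is high; training alternates between lowering each loss in turn.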

Real-Time Rendering and Edge Computing

Real-time rendering and edge computing are crucial for object manipulation in films because they improve performance and reduce latency. Cloud-edge collaborative rendering models can significantly decrease network and interaction latency while improving frame rates in complex lighting scenarios (He et al., 2023). By processing data closer to its source, edge computing reduces latency, making real-time edits smoother and more responsive, especially for tasks such as object tracking and environment updates. Edge computing systems for real-time object tracking can divide compute-intensive tasks between IoT devices and edge servers, reducing device power consumption while adapting to network conditions and user requirements (Zhao et al., 2018). These technologies are essential for applications where delays could disrupt the immersive experience, such as interactive media, live broadcast editing, and real-time manipulation of computationally heavy objects.
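
The offloading idea can be illustrated with a simplified model: a processing pipeline is split at some stage, earlier stages run on the device, the intermediate data is sent over the network, and the remaining stages run on the edge server. The Python sketch below picks the split that minimizes device energy while meeting a latency deadline. All stage timings, power figures, and data sizes are hypothetical, and this is a generic illustration of the concept, not the algorithm used by ECRT (Zhao et al., 2018).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Stage:
    device_ms: float  # time to run this stage on the device
    edge_ms: float    # time to run this stage on the edge server
    output_kb: float  # size of the data this stage produces


def best_split(stages: list[Stage], input_kb: float, bandwidth_kb_per_ms: float,
               compute_mw: float, transmit_mw: float, deadline_ms: float) -> Optional[int]:
    """Return k such that stages[:k] run on the device and stages[k:] on the edge,
    minimizing device energy subject to the latency deadline (None if infeasible)."""
    best_k, best_energy = None, float("inf")
    for k in range(len(stages) + 1):
        device_ms = sum(s.device_ms for s in stages[:k])
        edge_ms = sum(s.edge_ms for s in stages[k:])
        # Data crossing the network: the raw input if nothing runs locally,
        # otherwise the output of the last on-device stage.
        sent_kb = input_kb if k == 0 else stages[k - 1].output_kb
        transfer_ms = sent_kb / bandwidth_kb_per_ms
        latency_ms = device_ms + transfer_ms + edge_ms
        # Device energy in millijoules: power (mW) x time (ms) / 1000.
        energy_mj = (compute_mw * device_ms + transmit_mw * transfer_ms) / 1000
        if latency_ms <= deadline_ms and energy_mj < best_energy:
            best_k, best_energy = k, energy_mj
    return best_k


pipeline = [
    Stage(device_ms=5, edge_ms=1, output_kb=50),   # hypothetical resize/preprocess
    Stage(device_ms=80, edge_ms=8, output_kb=2),   # hypothetical object detection
    Stage(device_ms=10, edge_ms=1, output_kb=1),   # hypothetical tracking update
]
k = best_split(pipeline, input_kb=600, bandwidth_kb_per_ms=20,
               compute_mw=2000, transmit_mw=1000, deadline_ms=50)
print(k)  # 1: preprocess on the device, offload detection and tracking
```

With these made-up numbers, sending the raw frame costs more transmit energy than shrinking it locally first, while running detection on the device would miss the 50 ms deadline.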

Challenges in Frame Consistency and Continuity

AI-based object recognition and modification also face challenges in maintaining continuity and coherence across frames, because a change made to an object in one frame must be applied consistently to every subsequent frame. This requires the AI to track and modify objects accurately over hundreds or thousands of frames while accounting for changes in perspective, lighting, and object movement.

Research shows that even state-of-the-art object detectors can produce inconsistent results under small image distortions, with consistency ranging from 83.2% to 97.1% across different video datasets (Tung et al., 2022). Maintaining frame continuity is crucial in interactive media because inconsistencies can disrupt the immersive experience. Advanced approaches such as temporal GANs are being explored to improve tracking and consistency, although real-time application remains challenging because of their heavy computational requirements.
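
One simple way to reduce frame-to-frame jitter when an edit follows a tracked object is to smooth the object's bounding box over time. The sketch below applies an exponential moving average to per-frame boxes; the smoothing factor `alpha` is a hypothetical parameter, and this basic filter is a stand-in for illustration, not an example of the temporal GAN approaches discussed in this section.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)


def smooth_boxes(boxes: list[Box], alpha: float = 0.5) -> list[Box]:
    """Exponentially smooth per-frame boxes: s_t = alpha * b_t + (1 - alpha) * s_{t-1}.

    Smaller alpha gives steadier boxes (less jitter) but lags behind fast motion.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: list[Box] = []
    for box in boxes:
        if not smoothed:
            smoothed.append(box)  # first frame: nothing to smooth against
        else:
            prev = smoothed[-1]
            smoothed.append(tuple(alpha * b + (1 - alpha) * p for b, p in zip(box, prev)))
    return smoothed


# A detector output that jitters by a few pixels around a static object.
raw = [(100, 100, 200, 200), (104, 98, 204, 198), (99, 101, 199, 201), (103, 99, 203, 199)]
for box in smooth_boxes(raw):
    print([round(v, 2) for v in box])
```

In this example the left edge of the raw boxes wanders over a 5-pixel range, while the smoothed edge stays within 2 pixels, so an overlay anchored to the smoothed box would flicker less.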

Copyright and Intellectual Property Concerns

Using AI to modify or manipulate film objects raises significant copyright and intellectual property concerns, as alterations may infringe on rights held by the creators of the original work. Under current copyright law, modifying copyrighted material without permission is generally restricted unless it falls under fair use. The status of the resulting works is also unsettled: AI-generated works can meet originality requirements for copyright protection, but most jurisdictions do not legally recognize AI as an independent copyright holder (Zhang, 2023).

Creative Ownership and Authorship

The ability to change or manipulate elements in films or other media raises important questions about authorship and creative ownership. Directors, writers, and producers may feel that allowing alterations would undermine the intended story or purpose of the film. On the other hand, some argue that enabling viewers to modify content introduces a new form of interactive storytelling that enhances engagement and personalizes the experience. This tension between traditional artistic ownership and emerging collaborative media is likely to shape ongoing debates around creative control and audience involvement in AI-modified content.

Potential for Misuse and Ethical Implications

AI can alter or generate various forms of art, but it also poses significant risks of misuse, including unauthorized modifications that may lead to false narratives or harmful representations. AI-driven modifications can be used for malicious purposes, such as generating misinformation, deepfakes, or offensive edits, which calls for clear ethical guidelines for such technologies. Deepfake technology, for example, offers benefits in filmmaking and digital art but simultaneously raises concerns about misinformation and privacy violations (Maniyal & Kumar, 2024). Ensuring that these AI tools are applied within legal and ethical boundaries will require strict regulation to prevent unauthorized or deceptive modifications.

Conclusion

The ability to manipulate objects in films through AI opens up wide-ranging possibilities for viewer engagement and storytelling innovation, but it also poses significant technical, legal, and ethical challenges. While current technologies show potential for creating immersive and interactive experiences, further research is needed to fully understand the complexities of building a fully interactive, AI-based object manipulation platform. Future developments should focus on building legal frameworks and ethical guidelines that ensure the proper use of AI-driven object manipulation in film and maintain a balance between creative freedom and consumer rights.

References

Nautiyal, R., Jha, R. S., Kathuria, S., Chanti, Y., Rathor, N., & Gupta, M. (2023). Intersection of Artificial Intelligence (AI) in Entertainment Sector. 2023 4th International Conference on Smart Electronics and Communication (ICOSEC), 1273–1278. https://doi.org/10.1109/ICOSEC58147.2023.10275976

AI & Entertainment: The Revolution of Customer Experience. (n.d.). Lecture Notes in Education Psychology and Public Media. https://doi.org/10.54254/2753-7048/30/20231719

Lees, D. (2024). Deepfakes in documentary film production: images of deception in the representation of the real. Studies in Documentary Film, 18(2), 108–129. https://doi.org/10.1080/17503280.2023.2284680

Noel, A. J. (2019). Netflix Tries Its Hand at Adult Interactive Media with Black Mirror: Bandersnatch. VMG Studios Blog. blog.vmgstudios.com/netflix-triesits-hand-at-adult-interactive-media-with-blackmirror-bandersnatch

Wang, T.-C., Liu, M.-Y., Zhu, J.-Y., Tao, A., Kautz, J., & Catanzaro, B. (2018). High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8798–8807. https://doi.org/10.1109/CVPR.2018.00917

Ashwith, A., Sethuram, A., Nasreen, A., Shobha, G., & Iyengar, S. S. (2022). Deep Learning-Based Object Recognition in Video Sequences. International Journal of Computing and Digital Systems, 11(1), 177–186. https://doi.org/10.12785/ijcds/110114

He, Z., Yang, Y., Li, Z., Chen, N., Zhang, A., Su, Y., Sun, Y., Liu, D., Du, B., Fan, H., Lu, T., & Wang, T. (n.d.). CERender: Real-Time Cloud Rendering Based on Cloud-Edge Collaboration. In Computer Supported Cooperative Work and Social Computing (pp. 491–501). Springer Nature Singapore. https://doi.org/10.1007/978-981-99-9637-7_36

Zhao, Z., Jiang, Z., Ling, N., Shuai, X., Xing, G., Ramachandran, G. S., & Krishnamachari, B. (2018). ECRT: An Edge Computing System for Real-Time Image-based Object Tracking. Proceedings of the 16th ACM Conference on Embedded Networked Sensor Systems, 394–395. https://doi.org/10.1145/3274783.3275199

Tung, C., Goel, A., Bordwell, F., Eliopoulos, N., Hu, X., Lu, Y.-H., & Thiruvathukal, G. K. (2022). Why Accuracy is Not Enough: The Need for Consistency in Object Detection. IEEE MultiMedia, 29(3), 8–16. https://doi.org/10.1109/MMUL.2022.3175239

Zhang, F. (2023). Copyright Issues in Artificial Intelligence: A Comprehensive Examination from the Perspectives of Subject and Object. Communications in Humanities Research, 15, 172–182. https://doi.org/10.54254/2753-7064/15/20230664

Maniyal, V., & Kumar, V. (2024). Unveiling the Deepfake Dilemma: Framework, Classification, and Future Trajectories. IT Professional, 26(2), 32–38. https://doi.org/10.1109/MITP.2024.3369948
